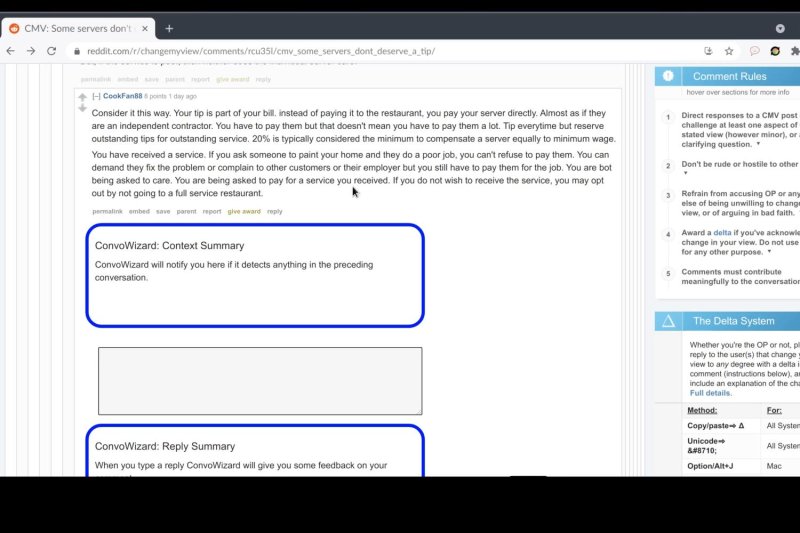

Cornell University's new AI tool, ConvoWizard, can “detect when tensions are escalating and nudge users away from using incendiary language,” the university said in a statement Tuesday. Photo courtesy of Cornell University

Feb. 14 (UPI) -- Researchers at Cornell University have developed and are testing an artificial intelligence tool that can track online conversations in real time, alerting a person before conversations get too heated.

Named ConvoWizard, the AI tool can "detect when tensions are escalating and nudge users away from using incendiary language," the university said in a statement Tuesday.

ConvoWizard's creators say the AI tool could be used "to help identify when tense online debates are inching toward irredeemable meltdown."

Researchers are aiming for ConvoWizard to play a role similar to that of a moderator in online forums.

"Traditionally, platforms have relied on moderators to -- with or without algorithmic assistance -- take corrective actions such as removing comments or banning users. In this work we propose a complementary paradigm that directly empowers users by proactively enhancing their awareness about existing tension in the conversation they are engaging in and actively guides them as they are drafting their replies to avoid further escalation," the researchers write in the opening paragraph of their paper.

The tool can both inform users when their conversation is starting to get tense and apply the same analysis to their replies in real time. The program flags a draft response that is likely to elevate tensions, encouraging constructive debate rather than vitriol.

Although the tool is still in the testing stages, researchers believe it has already provided "promising signs that conversational forecasting" can reduce negative interactions in an online environment.

"Well-intentioned debaters are just human. In the middle of a heated debate, in a topic you care about a lot, it can be easy to react emotionally and only realize it after the fact," one of the project's researchers, Jonathan Chang, said in a statement.

"There's been very little work on how to help moderators on the proactive side of their work. We found that there is potential for algorithmic tools to help ease the burden felt by moderators and help them identify areas to review within conversations and where to intervene," he told the Cornell Chronicle.