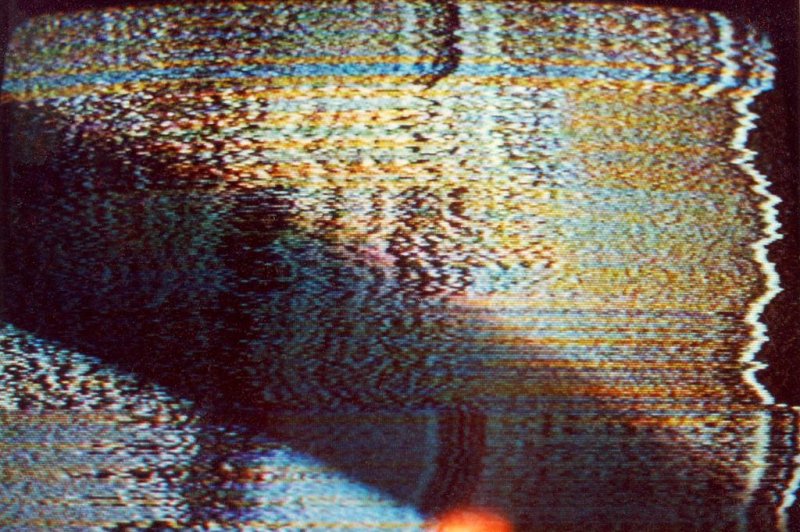

Computer algorithms trained to spot objects can sometimes see things that aren't there in TV static. Photo by Justin March/Flickr

March 22 (UPI) -- Using the same visual tricks that trip up computers, researchers at Johns Hopkins University have managed to get humans to think like CPUs.

"Most of the time, research in our field is about getting computers to think like people," Chaz Firestone, an assistant professor of psychological and brain sciences at Johns Hopkins, said in a news release. "Our project does the opposite -- we're asking whether people can think like computers."

Computers do a few things much better than humans. They can easily solve complex math problems and remember vast quantities of information. But some tasks that are easy for humans, such as recognizing everyday objects like a dog, a bus or a table and chairs, are exceedingly difficult for even the most powerful computers.

However, computers are getting smarter. Artificial intelligence algorithms designed to mimic the human brain's neural processes have helped computers become better visual processors, paving the way for self-driving cars and robots capable of recognizing facial expressions.

But these algorithms aren't foolproof. In fact, they can be hacked rather easily. Certain images, known as "adversarial" or "fooling" images, cause the algorithms to misidentify what they see, and hackers can exploit these loopholes.

Tweak a few pixels and a computer can easily mistake a train for a banana. Sometimes computers see objects that aren't there, finding cats and birds in the equivalent of television static.

Scientists assumed this trickery was tied to some fundamental difference between the ways computers and human brains process visual information.

"These machines seem to be misidentifying objects in ways humans never would," Firestone said. "But surprisingly, nobody has really tested this. How do we know people can't see what the computers did?"

Firestone decided to find out. He and Zhenglong Zhou, a Johns Hopkins senior majoring in cognitive science, tasked participants with processing images the same way a computer would, using a limited vocabulary for identifying objects.

Study participants were shown pictures that had previously tricked computers and asked to decide whether the image represented the object the computer chose or another random object. More than 90 percent of the participants made the same decision as the computer.

Even when participants were allowed to choose from as many as 48 different objects or were asked to identify objects in TV static, they agreed with the computer algorithms' choices at a rate greater than random chance.

Researchers published the results of their tests in the journal Nature Communications.

"We found if you put a person in the same circumstance as a computer, suddenly the humans tend to agree with the machines," Firestone said. "This is still a problem for artificial intelligence, but it's not like the computer is saying something completely unlike what a human would say."